Generative AI’s Impact on Phishing Attacks

In 2023, generative AI has had an increasingly significant impact on technology, most visibly through ChatGPT. Although the technology is not entirely new, it has emerged as a revolutionary force that is changing how we live and work. The growth of AI has brought new opportunities but also new dangers, notably in cybersecurity, where it is reshaping the world of phishing attacks.

A New Era of Phishing

The widespread availability of generative AI has given threat actors powerful tools that have substantially improved their email phishing capabilities. Recent research by Darktrace shows how email phishing tactics have changed in the age of generative AI. Since ChatGPT's launch, the overall volume of email phishing attacks has remained largely stable, but their character has changed significantly.

Phishers no longer rely primarily on tricking victims into clicking malicious links. Instead, they are adopting a more sophisticated approach that emphasises linguistic complexity, including text volume, punctuation, and sentence length. Evidence of this shift is a 135% increase in “novel social engineering attacks” between January and February 2023, coinciding with the widespread adoption of ChatGPT. This is concerning because generative AI tools such as ChatGPT enable threat actors to craft highly effective, targeted phishing attacks that convincingly mimic legitimate emails from trusted sources.

Evolution of Generative AI Phishing

More recently, Darktrace observed a further shift in phishing attacks between May and July of this year: malicious emails are increasingly impersonating the IT department rather than senior executives. Attackers continually adapt their behaviour to avoid detection. VIP impersonation attacks, which pose as senior executives, fell by 11%, while email account takeover attempts rose by 52% and impersonation of internal IT staff rose by 19%.

As employees grow more alert to senior-executive impersonation, attackers are switching to impersonating IT staff instead. Generative AI makes the situation worse by giving attackers the means to produce highly convincing, sophisticated communications, including voice deepfakes, that can effectively trick staff.

A Complex Landscape for Cyber Defense

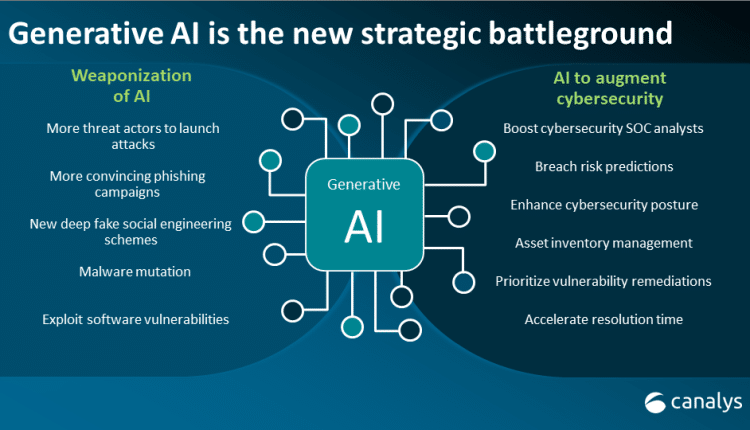

Email compromise remains the biggest source of risk for companies, and generative AI has added another layer of complexity to cyber defence. Trust in digital communications continues to decline as generative AI becomes increasingly pervasive across a variety of media, including images, audio, video, and text. The need for effective cybersecurity measures has never been more pressing.

The Role of Defensive AI

In the face of these difficulties, it is crucial to understand that AI is neither intrinsically good nor harmful; the results depend on how humans use it. Cybersecurity teams can put the same AI technologies that give attackers an advantage to defensive use. Self-learning defensive AI analyses an organisation's typical communication patterns to understand the nuances of how the company and its employees behave. It can then distinguish authentic emails from suspicious ones by picking up on subtle details such as tone and sentence length.
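To make the idea of learning a baseline more concrete, here is a minimal, hypothetical sketch in Python. It is not Darktrace's method or any vendor's API. It assumes a few simple stylometric features (word count as a proxy for text volume, punctuation count, and mean sentence length, echoing the features mentioned earlier), learns each feature's mean and standard deviation from a sender's known-good emails, and flags a new email whose features deviate by more than an assumed threshold of three standard deviations. The function names `linguistic_features`, `build_baseline`, `anomaly_score` and `is_suspicious` are illustrative, and tone is not modelled at all.

```python
import re
import statistics


def linguistic_features(text: str) -> dict[str, float]:
    """Compute a few simple stylometric features (illustrative choices only)."""
    words = re.findall(r"[A-Za-z0-9']+", text)
    # Naive sentence split on terminal punctuation; empty fragments are dropped.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    punctuation = sum(1 for ch in text if ch in ",.;:!?-\"'()")
    return {
        "word_count": float(len(words)),
        "punctuation_count": float(punctuation),
        "mean_sentence_length": len(words) / max(len(sentences), 1),
    }


def build_baseline(history: list[str]) -> dict[str, tuple[float, float]]:
    """Learn the mean and population std dev of each feature from known-good emails."""
    if not history:
        raise ValueError("need at least one known-good email to build a baseline")
    rows = [linguistic_features(text) for text in history]
    baseline = {}
    for name in rows[0]:
        values = [row[name] for row in rows]
        baseline[name] = (statistics.mean(values), statistics.pstdev(values))
    return baseline


def anomaly_score(text: str, baseline: dict[str, tuple[float, float]]) -> float:
    """Largest absolute z-score of the email's features against the baseline."""
    features = linguistic_features(text)
    score = 0.0
    for name, (mean, std) in baseline.items():
        # Assumption: fall back to a unit scale when a feature never varied.
        scale = std if std > 0 else 1.0
        score = max(score, abs(features[name] - mean) / scale)
    return score


def is_suspicious(text: str, baseline: dict[str, tuple[float, float]],
                  threshold: float = 3.0) -> bool:
    """Flag emails that deviate strongly from the sender's usual style (assumed threshold)."""
    return anomaly_score(text, baseline) > threshold


if __name__ == "__main__":
    # Hypothetical history of routine emails from an internal IT sender.
    history = [
        "Hi team. The printer on floor two is fixed.",
        "Hi all. Wi-Fi maintenance is tonight at nine.",
        "Hello. Please restart your laptop after the update.",
    ]
    baseline = build_baseline(history)
    lure = (
        "URGENT!!! Your mailbox has been suspended, and all of your files, contacts, "
        "and calendar entries will be permanently deleted within 24 hours unless you "
        "verify your credentials immediately; click the secure portal, log in, and "
        "confirm your identity now!!!"
    )
    print(is_suspicious(history[0], baseline))  # False
    print(is_suspicious(lure, baseline))        # True
```

A real system would learn from far more history and from richer signals such as sender relationships, timing and tone. With only three baseline emails, as here, the standard deviations are tiny, so z-scores grow quickly and the threshold would need tuning on real data.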

In this battle of wits, where human adversaries wield AI as a weapon, human defenders will determine the outcome. The answer to hyper-personalised, AI-powered email attacks is defensive AI that knows your company better than any external generative AI ever could.

Conclusion

Cybersecurity is ultimately a contest between humans. Whatever happens, a threat actor is always working behind the scenes, and artificial intelligence is only a tool. To combat AI-powered threats successfully, we must collectively embrace AI as a powerful ally in the fight against cyberattacks rather than viewing it only as a threat vector. If we can strike this balance, we will have a fighting chance against the changing landscape of cyber threats.